How to Optimize Data Analysis with AI without Writing 3 Lines of Code is a practical guide for anyone who wants to get more out of their data with AI but has little or no programming experience.

This guide aims to demystify the intersection of artificial intelligence and data processing, showing how individuals can use AI tools to enhance their data analysis without extensive coding skills. By understanding the role AI plays in data tasks, readers can unlock new capabilities while avoiding common misconceptions that often deter people from adopting these technologies.

Introduction to AI in Data Processing

Artificial intelligence (AI) is revolutionizing the field of data processing by enhancing the way we analyze and interpret vast amounts of information. In today’s data-driven world, AI tools can streamline workflows, uncover insights, and automate tasks, which makes them integral to data-centric organizations. This lets businesses and individuals put their data to work without extensive coding skills.

Incorporating AI into data tasks offers a range of benefits, particularly for non-technical users. Through intuitive interfaces and automated processes, AI tools let users work with data in meaningful ways without a deep understanding of programming languages. This democratization of data analysis empowers a broader audience to make data-driven decisions while saving time and resources.

Benefits of Utilizing AI Without Extensive Coding

Several advantages emerge from using AI for data processing tasks, especially for those who may not be proficient in coding. The following points highlight the key benefits:

1. User-Friendly Interfaces

Modern AI tools often come with graphical user interfaces that simplify data manipulation. Users can perform complex analyses through drag-and-drop functionalities rather than writing code.

2. Automated Insights

AI algorithms can automatically identify trends and patterns within datasets, providing users with actionable insights without requiring extensive data manipulation skills.

3. Time Efficiency

By automating routine data tasks, AI significantly reduces the time spent on data collection and analysis, enabling quicker decision-making and strategic planning.

4. Scalability

AI tools can handle large datasets that would be cumbersome to analyze manually, so users can work with far more data without getting bogged down by coding complexities.

5. Enhanced Accuracy

AI systems apply advanced analytics techniques consistently, which can reduce human error in repetitive tasks and lead to more accurate data interpretations.

AI enables a broader audience to engage with data-driven decisions, shifting the traditional paradigm of data analysis.

Common Misconceptions About AI and Coding Requirements

Despite the advancements in AI technology, there are prevalent misconceptions regarding the necessity of coding skills. Addressing these misunderstandings is crucial for encouraging wider adoption of AI tools:

- Many believe that leveraging AI for data analysis requires a strong background in programming. However, numerous user-friendly platforms exist that allow users to perform sophisticated analyses without writing a single line of code.

- Another misconception is that AI can replace human intuition entirely in data analysis. While AI excels at identifying patterns within data, human oversight remains essential for contextual understanding and strategic decision-making.

- Some individuals assume that using AI tools eliminates the need for data literacy. In reality, understanding data concepts is still important to effectively interpret AI-generated insights and make informed decisions.

By clarifying these misconceptions, organizations can better utilize AI’s potential, fostering an environment where data-driven insights are accessible to all team members, regardless of their technical expertise.

Tools for Non-Coders

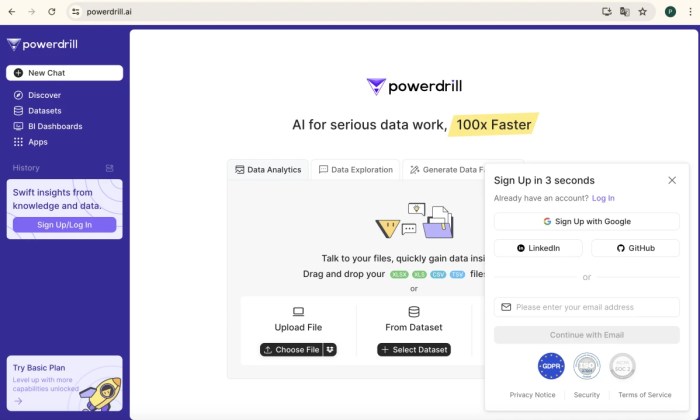

In the realm of data analysis, the rise of AI has opened doors for individuals without coding skills to engage in powerful data processing. With a range of user-friendly AI tools available, non-coders can harness artificial intelligence to derive insights from their data. This section outlines some of the most popular AI tools that support data processing without requiring programming knowledge.

Many of these tools were designed specifically with non-coders in mind. They typically feature intuitive interfaces and drag-and-drop functionality that simplify the data analysis process. The following list highlights some of the most popular options, each with its own features and strengths.

Popular AI Tools for Data Processing

These tools let users perform data analysis tasks with little or no coding, removing a major barrier to entry.

- Google AutoML: Part of Google Cloud (and now offered through Vertex AI), AutoML lets users build custom machine learning models with minimal effort. Its easy-to-use interface and automated processes make it suitable for users with little technical background.

- Tableau: Known for its data visualization capabilities, Tableau also offers AI-driven analytics that assist users in identifying trends and patterns through simple drag-and-drop options.

- Microsoft Power BI: With its powerful data visualization features, Power BI integrates AI capabilities that help users analyze data without needing to write code.

- RapidMiner: This platform offers a visual interface for building predictive models and conducting data analysis. Users can utilize a variety of pre-built models to perform complex analysis easily.

- KNIME: Known for its open-source data analytics platform, KNIME allows users to create data workflows visually, enabling non-coders to engage in advanced data processing.

To better understand the capabilities of these tools, it helps to compare their features side by side. The table below summarizes their key attributes, focusing on ease of use and functionality.

| Tool | Ease of Use | Key Features |
|---|---|---|
| Google AutoML | Very Easy | Custom model training, image and text recognition |
| Tableau | Easy | Data visualization, dashboard creation |
| Microsoft Power BI | Easy | Data modeling, report sharing, real-time dashboards |
| RapidMiner | Moderate | Predictive analytics, model validation |
| KNIME | Moderate | Data workflow design, integration with various data sources |

For beginners interested in leveraging one of these AI tools, setting up Google AutoML offers a straightforward entry point. Here’s a step-by-step guide to getting started:

1. Create a Google Cloud Account

Begin by signing up for a Google Cloud account if you don’t already have one. Google offers a free tier for new users.

2. Navigate to AutoML

Once logged in, open the Google Cloud Console and find AutoML among the AI and machine learning products (in the current console, AutoML is part of Vertex AI).

3. Select Your Data Type

Choose the appropriate AutoML product based on your data type, such as AutoML Vision for images or AutoML Natural Language for text.

4. Upload Your Data

Follow the prompts to upload your dataset. Ensure your data is clean and correctly formatted for the best results.

5. Train Your Model

Use the guided interface to train your model. Google AutoML automates much of the process, making it easier for novices to achieve results.

6. Evaluate and Use Your Model

After training, assess your model’s performance with the built-in evaluation metrics. Once satisfied, you can deploy it for use in applications or further analysis.

By using tools like these, you can streamline your data analysis and gain valuable insights without diving into complex code. Their accessibility has opened data analysis to a much wider audience, so far more people can participate in the data-driven landscape.
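Step 4 asks you to make sure your data is clean and correctly formatted before uploading. For readers comfortable running a short script, the sketch below shows the kind of checks worth doing on a CSV file first. It is a minimal sketch under stated assumptions: the function name `check_csv` and the sample data are hypothetical, and the checks (a non-blank, unique header and a consistent number of fields per row) are generic sanity checks, not Google's official import requirements, which you should confirm in the AutoML documentation for your data type.

```python
import csv
import io


def check_csv(text):
    """Return a list of problems found in CSV text (an empty list means none).

    Hypothetical helper: these are generic sanity checks, not Google's
    official import rules.
    """
    rows = list(csv.reader(io.StringIO(text)))
    if not rows:
        return ["file is empty"]
    header = rows[0]
    problems = []
    if any(not name.strip() for name in header):
        problems.append("header has a blank column name")
    if len(set(header)) != len(header):
        problems.append("header has duplicate column names")
    # Row numbers count the header as row 1.
    for row_no, row in enumerate(rows[1:], start=2):
        if not any(cell.strip() for cell in row):
            problems.append(f"row {row_no}: empty row")
        elif len(row) != len(header):
            problems.append(f"row {row_no}: expected {len(header)} fields, got {len(row)}")
    return problems


# Hypothetical sample: row 3 is missing its label and row 4 is blank.
sample = "id,text,label\n1,great product,positive\n2,arrived broken\n\n"
for problem in check_csv(sample):
    print(problem)
```

To check a file on disk, you could pass `open(path, newline="").read()` to `check_csv`. The function reports problems rather than fixing them, so you stay in control of what changes before upload.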

Best Practices for Data Preparation

Effective data preparation is a vital step in any data analysis project. It ensures that the data is clean, structured, and ready for analysis, which leads to more accurate insights and better decision-making. Poorly prepared data can produce misleading results, waste resources, and reduce productivity, so understanding and applying best practices in data preparation can significantly improve the overall analysis process.

The quality of data directly affects the reliability of analysis outcomes. High-quality data is accurate, complete, consistent, and timely. AI plays a crucial role in improving data quality by automating data cleaning, identifying anomalies, and standardizing formats. With AI tools, non-coders can strengthen their data preparation without extensive programming knowledge and focus on analysis rather than getting bogged down by data issues.

Steps for Preparing Data Effectively for Analysis

Preparing data for analysis involves several key steps that can help ensure a successful outcome. These steps provide a structured approach to handling data and can be summarized as follows:

- Data Collection: Gather data from various sources, ensuring it is relevant and comprehensive.

- Data Cleaning: Identify and correct errors or inconsistencies in the data. This may include handling missing values, duplicates, and outliers.

- Data Transformation: Convert data into a suitable format for analysis. This may involve normalization, aggregation, and encoding categorical variables.

- Data Validation: Verify that the data is accurate and meets the required standards for the intended analysis.

- Data Integration: Combine data from different sources to create a unified dataset that provides a holistic view.

Implementing these steps ensures that the data is not only ready for analysis but also maximizes its potential to yield valuable insights.
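To show what a no-code tool does behind the scenes, the steps above can be sketched on a tiny in-memory dataset using only the Python standard library. This is an illustrative sketch, not any particular tool's implementation: the field names (`order_id`, `customer_id`, `amount`, `region`) and all helper functions are hypothetical, collection is assumed to have already happened, and integration runs before transformation here because the region must be joined in before it can be encoded.

```python
def deduplicate(rows):
    """Data cleaning: drop exact duplicate rows, keeping the first occurrence."""
    seen, result = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            result.append(row)
    return result


def integrate(orders, customers):
    """Data integration: join each order to its customer's region via customer_id."""
    region_by_id = {c["customer_id"]: c["region"] for c in customers}
    return [{**o, "region": region_by_id.get(o["customer_id"])} for o in orders]


def transform(rows):
    """Data transformation: min-max scale 'amount' to [0, 1] and one-hot encode 'region'."""
    amounts = [r["amount"] for r in rows]
    low, high = min(amounts), max(amounts)
    spread = (high - low) or 1  # avoid dividing by zero if all amounts are equal
    regions = sorted({r["region"] for r in rows if r["region"] is not None})
    out = []
    for r in rows:
        new = {"order_id": r["order_id"], "amount_scaled": (r["amount"] - low) / spread}
        for region in regions:
            new[f"region_{region}"] = int(r["region"] == region)
        out.append(new)
    return out


def validate(rows):
    """Data validation: raise if a scaled amount is out of range or a region is missing."""
    for r in rows:
        if not 0.0 <= r["amount_scaled"] <= 1.0:
            raise ValueError(f"order {r['order_id']}: amount out of range")
        if sum(v for k, v in r.items() if k.startswith("region_")) != 1:
            raise ValueError(f"order {r['order_id']}: no matching region")
    return rows


# Hypothetical collected data from two sources.
orders = [
    {"order_id": 1, "customer_id": "a", "amount": 20.0},
    {"order_id": 1, "customer_id": "a", "amount": 20.0},  # exact duplicate
    {"order_id": 2, "customer_id": "b", "amount": 80.0},
    {"order_id": 3, "customer_id": "a", "amount": 50.0},
]
customers = [
    {"customer_id": "a", "region": "north"},
    {"customer_id": "b", "region": "south"},
]

prepared = validate(transform(integrate(deduplicate(orders), customers)))
for row in prepared:
    print(row)
```

Keeping each step as its own small function mirrors the step-by-step structure above: any one step can be inspected or replaced without touching the others.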

Importance of Data Quality and AI Assistance

Data quality is paramount for reliable, actionable insights. High-quality data reduces the risk of errors during analysis and leads to more valid conclusions. AI algorithms can detect patterns and inconsistencies that might otherwise go unnoticed, improving overall data integrity.

AI technologies such as machine learning and natural language processing can automate data cleaning tasks such as detecting and imputing missing values, correcting typos, and identifying anomalies. This saves time and produces a more accurate prepared dataset.
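As a simple, non-ML stand-in for the automated cleaning described above, the sketch below fills missing values with the median of the observed values and flags anomalies with Tukey's interquartile-range (IQR) rule, which treats values more than 1.5 times the IQR beyond the quartiles as outliers. The function name and sample readings are hypothetical; AI-based tools may use more sophisticated models, but the inputs and outputs have the same shape.

```python
import statistics


def impute_and_flag(values, k=1.5):
    """Fill missing values (None) with the median and flag IQR outliers.

    Returns (filled_values, outlier_indices). Outliers are flagged, not
    removed, so a person can decide whether they are errors or real extremes.
    """
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    q1, _, q3 = statistics.quantiles(observed, n=4)  # quartiles of observed values
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    filled = [median if v is None else v for v in values]
    outliers = [i for i, v in enumerate(values) if v is not None and not low <= v <= high]
    return filled, outliers


# Hypothetical sensor readings with two gaps and one suspicious spike.
readings = [10, 12, 11, None, 13, 12, 95, None, 11]
filled, outliers = impute_and_flag(readings)
print(filled)    # [10, 12, 11, 12, 13, 12, 95, 12, 11]
print(outliers)  # [6]
```

The median is used instead of the mean because a single extreme value such as 95 would pull the mean upward and distort every imputed value.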

Comparison of Data Preparation Techniques

Different data preparation techniques offer various advantages depending on the context and requirements of the analysis. Understanding these techniques can help in selecting the most appropriate method for specific data challenges. Below is a comparison table highlighting some common data preparation techniques along with their advantages.

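| Technique | Advantages |
|---|---|
| Manual Data Cleaning | Full human control; reviewers can apply context and domain knowledge to ambiguous cases |
| Automated Data Cleaning | Saves time, scales to large datasets, and applies the same rules consistently |
| Data Normalization | Puts numeric values on a comparable scale so that no single variable dominates the analysis |
| Data Transformation | Converts data into a format suited to the analysis, for example through aggregation or encoding categorical variables |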

Visualization Techniques with AI

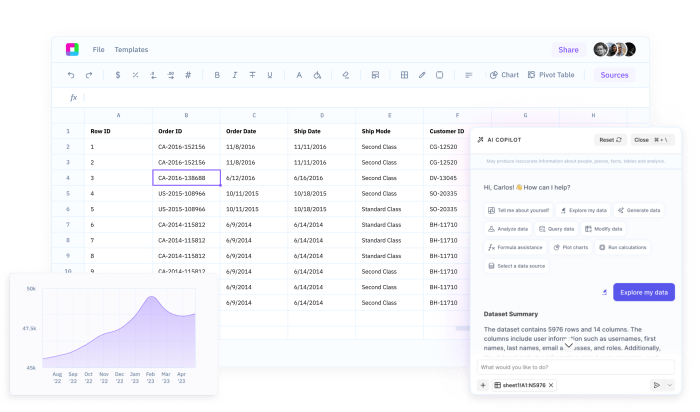

Data visualization is a key part of data analysis, turning complex datasets into visual formats that are easier to understand. AI has significantly expanded what visualization can do, helping users uncover insights that may not be immediately apparent. AI can automate the visualization process, suggest suitable formats for presenting data, and even improve the look of visualizations, making them more impactful and engaging.

AI can support visualization work by analyzing data patterns and automatically creating visuals that represent those patterns clearly and concisely. For instance, AI algorithms can identify trends, clusters, and outliers in data, allowing for dynamic visualizations that update in real time as new data arrives. This saves time and can surface insights that analysts might miss when building visualizations manually.

AI-Driven Visualization Tools and Their Functionalities

A variety of AI-driven visualization tools are available that simplify the process of creating sophisticated visual representations of data. These tools often come equipped with functionalities that cater to both novice users and seasoned data scientists. Some popular options include:

- Tableau: This widely-used tool incorporates AI through features like Explain Data, which automatically provides insights and explanations for trends in your visualizations, streamlining the investigative process.

- Power BI: Microsoft’s Power BI supports natural language queries through its Q&A feature, so users can type questions about their data and receive answers as visuals almost instantly. Its integration with Azure Machine Learning supports more advanced predictive analyses.

- Qlik Sense: Qlik utilizes associative analytics and AI to suggest visualizations based on the data provided. Its augmented intelligence capability helps users understand their data context and relationships.

- Google Data Studio (now Looker Studio): This tool offers AI features that automatically suggest suitable visualization types for the data at hand, allowing users to create compelling reports with minimal effort.
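Automatic chart suggestions like those described above can be approximated with a few rules of thumb about data types. The sketch below is a hypothetical, rule-based illustration, not how Tableau, Power BI, Qlik Sense, or Google Data Studio actually choose charts: it classifies each column as temporal, numeric, or categorical and maps common combinations to a chart type. The function names are assumptions.

```python
from datetime import date


def column_kind(values):
    """Classify a column as 'temporal', 'numeric', or 'categorical' from its values."""
    if all(isinstance(v, date) for v in values):
        return "temporal"
    if all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values):
        return "numeric"
    return "categorical"


def suggest_chart(x_values, y_values):
    """Suggest a chart for plotting y against x using common rules of thumb."""
    kinds = (column_kind(x_values), column_kind(y_values))
    if kinds == ("temporal", "numeric"):
        return "line chart"    # a measure changing over time
    if kinds == ("categorical", "numeric"):
        return "bar chart"     # comparing amounts across categories
    if kinds == ("numeric", "numeric"):
        return "scatter plot"  # relationship between two measures
    return "table"             # fall back when no rule matches


print(suggest_chart([date(2024, 1, 1), date(2024, 2, 1)], [120, 135]))
print(suggest_chart(["north", "south"], [40, 55]))
```

Real tools weigh many more signals (number of distinct values, cardinality, the user's question), but the principle of matching data types to chart types is the same one described in the best practices that follow.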

Best Practices for Creating Effective Visual Representations of Data

When creating visual representations of data, adhering to best practices can significantly improve clarity and impact. These practices ensure that visualizations communicate the intended message effectively and are accessible to a broader audience. Here are some key points to consider:

- Know Your Audience: Tailor your visuals to suit the knowledge level and interests of your target audience. This ensures that your visualizations resonate and communicate the intended insights clearly.

- Prioritize Clarity and Simplicity: Avoid cluttering visuals with excessive information. Focus on key data points and use whitespace effectively to enhance readability.

- Use Appropriate Visualization Types: Different data types require different visualization approaches. Select charts, graphs, or maps that best represent the data relationships you aim to illustrate.

- Incorporate AI Insights: Leverage AI-generated insights and suggestions to enhance your visualizations. Allow AI to guide you in identifying trends or anomalies that you might overlook.

- Test for Accessibility: Ensure your visualizations are accessible to everyone, including those with disabilities. This includes using color combinations that are friendly for color-blind individuals and providing alternative text for images.
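One accessibility check that is easy to automate is color contrast. The sketch below implements the relative-luminance and contrast-ratio formulas from the WCAG 2.x guidelines; the function names and sample colors are illustrative. Contrast is only part of the picture: color-blind-friendly design also means choosing palettes that do not rely on hue alone (the Okabe-Ito palette is a common choice) and adding labels or patterns wherever color carries meaning.

```python
def relative_luminance(hex_color):
    """Relative luminance of an sRGB color such as '#1f77b4', per WCAG 2.x."""
    hex_color = hex_color.lstrip("#")
    channels = [int(hex_color[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Undo the sRGB gamma curve to get linear light values.
    linear = [c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4 for c in channels]
    r, g, b = linear
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(color_a, color_b):
    """WCAG contrast ratio, from 1.0 (identical) to 21.0 (black on white)."""
    la, lb = relative_luminance(color_a), relative_luminance(color_b)
    lighter, darker = max(la, lb), min(la, lb)
    return (lighter + 0.05) / (darker + 0.05)


# WCAG 2.1 asks for at least 4.5:1 for normal-size text and 3:1 for
# graphical objects such as chart lines and bars.
for foreground in ("#000000", "#777777", "#ffff00"):
    print(foreground, "on white:", round(contrast_ratio(foreground, "#ffffff"), 2))
```

Running a check like this over a dashboard's palette catches combinations such as yellow on white, which is nearly invisible to many viewers.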

Effective data visualization is not just about making data pretty. It’s about making data understandable.

Automating Insights Generation

In data analysis, AI has emerged as a transformative force, particularly in automating the generation of insights from complex datasets. This shift improves efficiency and opens up deeper analysis to non-technical users who previously could not attempt it.

Automating insight generation with AI means using algorithms that sift through large amounts of data, identify patterns, and derive meaningful conclusions with little manual intervention. With machine learning and natural language processing, AI can deliver insights quickly, significantly reducing the time and effort that traditional analysis methods require.
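One common form of automated insight is spotting a trend and describing it in plain language. The sketch below fits a least-squares line to a series of values and turns the slope into a sentence. It is a hypothetical illustration of the idea, far simpler than the machine learning and natural language generation in commercial tools; the function names, the 1% flatness threshold, and the sample sales figures are all assumptions.

```python
def linear_trend(values):
    """Least-squares slope of values against their positions 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    variance = sum((x - mean_x) ** 2 for x in range(n))
    return covariance / variance


def describe_trend(label, values, flat_threshold=0.01):
    """Turn the slope into a one-sentence, plain-English insight."""
    slope = linear_trend(values)
    scale = abs(sum(values) / len(values)) or 1.0  # avoid dividing by zero when the average is 0
    if abs(slope / scale) < flat_threshold:        # change per period is under 1% of the average
        return f"{label}: roughly flat."
    direction = "rising" if slope > 0 else "falling"
    return f"{label}: {direction} by about {abs(slope):.1f} per period."


print(describe_trend("Weekly sales", [100, 104, 110, 115, 121]))
```

Comparing the slope to the series average keeps the "flat" test meaningful whether the values are in single units or in millions.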

Comparison of Manual Insight Generation and AI Automation

The contrast between manual and AI-driven insight generation is stark, especially in time efficiency and accuracy. Manual processes often require substantial human effort, which lengthens turnaround times for reports and insights. AI, by contrast, can process large datasets in near real time and return results almost immediately. The following points highlight the difference:

- Time Efficiency: AI can analyze data in minutes, while manual analysis might take days or weeks, depending on the data volume.

- Consistency: AI algorithms apply the same logic every time, avoiding the variation that creeps in when different analysts handle the same data.

- Scalability: AI can easily adapt to larger data sets, whereas manual analysis often struggles to keep pace with increasing data volumes.

- Deeper Insights: AI can uncover hidden patterns and correlations that might be missed in a manual review, leading to richer insights.

A notable case study illustrating the power of AI in automating insights generation comes from a large retail chain that implemented AI-powered analytics. By adopting machine learning algorithms, they were able to analyze customer purchasing data and identify trends in real-time, leading to a more responsive inventory management system. The results were impressive: not only did they reduce stock-out occurrences by 30%, but they also improved overall customer satisfaction scores significantly.

“In the first quarter alone, our AI system unlocked insights that drove a 20% increase in sales, demonstrating the profound impact of automating insights generation.”

Integrating AI with Existing Systems

Integrating AI tools into existing data workflows can significantly improve operational efficiency and decision-making. However, the process requires careful planning and execution to avoid disrupting established systems. This section outlines effective methods for integrating AI into existing frameworks, addresses common challenges, and presents a structured approach to the integration.

Methods for Integration

Integrating AI tools into current data workflows involves several key methods. These methods ensure that AI technologies complement existing processes rather than replace them. The following points highlight effective integration strategies:

- API Integration: Leveraging Application Programming Interfaces (APIs) allows AI tools to communicate with existing systems, facilitating data exchange and processing without major overhauls.

- Data Pipeline Management: Incorporating AI into data pipelines helps automate data collection, cleaning, and processing, enhancing the speed and accuracy of data analysis.

- Modular Implementation: Integrating AI in a modular fashion allows organizations to introduce AI capabilities gradually, minimizing disruption while assessing performance at each step.

- Cloud Solutions: Utilizing cloud-based AI services can simplify integration by offering scalable resources and compatibility with various platforms.
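The modular and API-based approaches above fit naturally into a pipeline in which each stage is a small, replaceable step. In the sketch below, all names are hypothetical: `keyword_score` is a placeholder for whatever AI service you would call through its API, and swapping it for a real client changes only the function passed to `make_scoring_step`, not the rest of the pipeline.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]
Step = Callable[[List[Record]], List[Record]]


def run_pipeline(records: List[Record], steps: List[Step]) -> List[Record]:
    """Pass records through each step in order; each step returns a new list."""
    for step in steps:
        records = step(records)
    return records


def drop_incomplete(records):
    """Cleaning step: keep only records with a non-empty 'text' field."""
    return [r for r in records if r.get("text")]


def normalize_text(records):
    """Processing step: trim whitespace and lower-case the text."""
    return [{**r, "text": r["text"].strip().lower()} for r in records]


def make_scoring_step(score_fn):
    """Wrap any scoring function (for example, an AI service call) as a pipeline step."""
    def score(records):
        return [{**r, "score": score_fn(r["text"])} for r in records]
    return score


def keyword_score(text):
    """Hypothetical stand-in for an AI service: counts positive keywords."""
    return sum(word in text for word in ("great", "fast", "love"))


raw = [{"id": 1, "text": "  Great product, FAST delivery "}, {"id": 2, "text": ""}, {"id": 3}]
result = run_pipeline(raw, [drop_incomplete, normalize_text, make_scoring_step(keyword_score)])
print(result)
```

Because every step has the same shape (a list of records in, a list of records out), new AI capabilities can be added one step at a time, which matches the gradual, modular rollout described above.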

Challenges and Solutions

While integrating AI into existing systems offers numerous advantages, organizations often face challenges. Being aware of these issues and potential solutions can facilitate a smoother integration process:

- Data Compatibility: Existing data formats may not align with AI systems. Ensuring data is standardized and accessible is crucial for effective integration.

- Change Resistance: Employees may resist adopting new technologies. Training and clear communication about the benefits of AI can alleviate these concerns.

- Technical Limitations: Legacy systems may lack the capacity to support advanced AI tools. Assessing infrastructure and making necessary upgrades is essential for successful integration.

- Cost Implications: Initial costs can be high. Developing a phased investment plan can help manage expenses while gradually realizing AI benefits.
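For the data compatibility challenge, a frequent first step is standardizing fields such as dates, which different systems often store in different formats. The sketch below converts several formats to ISO 8601 (`YYYY-MM-DD`). The list of formats is an assumption to adapt to your own sources, and it encodes choices: a value like `05/03/2024` is ambiguous, and this list reads slash-separated dates as day-first.

```python
from datetime import datetime

# Hypothetical formats seen across source systems; adjust for your data.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y", "%d %b %Y")


def to_iso_date(raw):
    """Convert a date string in any known format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # not this format; try the next one
    raise ValueError(f"unrecognized date format: {raw!r}")


for value in ("2024-03-05", "05/03/2024", "03-05-2024", "5 Mar 2024"):
    print(value, "->", to_iso_date(value))
```

Raising an error for unrecognized values, instead of guessing, surfaces new source formats early so they can be added to the list deliberately.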

Structured Plan for Smooth Integration

A structured plan is vital for effective AI integration. The step-by-step approach below applies, for example, to a retail company integrating an AI-driven inventory management system:

- Assessment: Conduct a comprehensive analysis of current systems, identifying pain points and integration opportunities.

- Planning: Develop a detailed roadmap that defines the integration process, timelines, and responsible parties.

- Pilot Testing: Implement a pilot program to test the AI system in a controlled environment, allowing for adjustments based on initial feedback.

- Deployment: Roll out the AI system across the organization, ensuring all stakeholders are informed and trained.

- Monitoring and Optimization: Continuously monitor system performance, collecting feedback to refine processes and optimize AI functionality.

This structured approach ensures that AI tools enhance existing workflows, ultimately leading to improved performance and decision-making in the organization.
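Pilot testing and ongoing monitoring both need a yardstick. The sketch below assumes you recorded a baseline error during the pilot and flags the system for review when its recent mean absolute error (MAE) rises more than 20% above that baseline. The metric, the 20% tolerance, the function names, and the sample inventory forecasts are all hypothetical choices for illustration.

```python
def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def check_model_health(actual, predicted, baseline_mae, tolerance=0.2):
    """Return the current MAE, the alert limit, and 'ok' or 'review'."""
    mae = mean_absolute_error(actual, predicted)
    limit = baseline_mae * (1 + tolerance)  # e.g. 20% worse than the pilot baseline
    return {"mae": mae, "limit": limit, "status": "ok" if mae <= limit else "review"}


# Hypothetical week of daily unit sales versus the system's forecasts.
actual = [40, 42, 38, 45, 50, 47, 44]
forecast = [41, 40, 39, 44, 47, 49, 43]
print(check_model_health(actual, forecast, baseline_mae=1.5))
```

A "review" status is a prompt for a person to investigate (new products, seasonality, data problems), not an automatic rollback.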

Ethical Considerations in AI Data Usage

In an era where artificial intelligence is revolutionizing data analysis, ethical considerations in AI data usage are paramount. As organizations rely more on AI systems to process and interpret data, the responsibility to ensure these systems operate fairly and transparently grows. Understanding these ethical dimensions helps safeguard both the integrity of the data and the trust of the people who interact with AI technologies.

Transparency and accountability in AI systems are essential for building trust among users and stakeholders. When AI systems are used for data-related tasks, the processes behind their decisions should be clear and understandable, so users can see how data is handled and which algorithms are applied. A lack of transparency can breed skepticism and mistrust, which undermines the effectiveness of AI initiatives.

Key Ethical Considerations

Several key ethical considerations arise when employing AI for data tasks. Addressing these considerations not only promotes ethical usage but also enhances the overall effectiveness of AI solutions.

- Bias and Fairness: AI systems can inadvertently perpetuate biases present in training data. Continuous evaluation and refinement of data sets are necessary to mitigate biases and ensure fair outcomes.

- Data Privacy: Protecting individual privacy should be a top priority. Organizations must adhere to data privacy laws and ensure that personal data is anonymized where possible before analysis.

- Accountability: Clear accountability structures must be established to determine who is responsible for decisions made by AI systems. This includes defining roles for data scientists, developers, and decision-makers.

- Informed Consent: Users should be made aware when their data is being collected and how it will be used, allowing them to give informed consent.
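Where analysis needs to link records about the same person without exposing who they are, one common technique is pseudonymization with a keyed hash (HMAC). The sketch below uses Python's standard `hmac` and `hashlib` modules; the function name, key, and sample records are placeholders. Pseudonymized data is not the same as anonymized data: anyone holding the key can link tokens back to known identifiers by hashing candidate values, and regulations such as the GDPR still treat pseudonymized data as personal data.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256), as 64 hex characters.

    The same identifier and key always give the same token, so records can
    still be joined. Store the key separately from the analytics dataset.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


key = b"replace-with-a-secret-from-your-key-store"  # hypothetical placeholder
rows = [{"email": "ana@example.com", "spend": 120}, {"email": "ben@example.com", "spend": 80}]
safe_rows = [{"customer": pseudonymize(r["email"], key), "spend": r["spend"]} for r in rows]
print(safe_rows)
```

A keyed hash is preferred over a plain SHA-256 of the email because, without the key, an attacker cannot simply hash a list of known addresses to reverse the tokens.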

Potential Risks and Mitigation Strategies

Employing AI in data analysis carries certain risks that must be identified and addressed proactively. Understanding these risks, alongside effective mitigation strategies, is crucial for ethical AI implementation.

- Risk of Misuse: AI can be used maliciously, leading to significant harm. Organizations must establish strict guidelines and monitoring to prevent misuse.

- Algorithmic Transparency: Complex AI algorithms can obscure decision-making processes. Simplifying models and employing explainable AI techniques can enhance transparency.

- Job Displacement: The automation of data analysis may lead to job losses. Organizations should invest in reskilling and upskilling their workforce to adapt to new roles created by AI technologies.

- Data Security: AI systems can be susceptible to cyberattacks. Implementing robust cybersecurity measures and conducting regular audits can help protect sensitive data.
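Explainable AI techniques aim to show why a model produced a given output. For a linear model the explanation is exact, because each prediction is a bias plus a sum of weight-times-value terms. The sketch below breaks one prediction into those terms and ranks them by size. The model, its weights, the function name, and the inputs are hypothetical; for non-linear models, methods such as SHAP or LIME estimate comparable per-feature contributions.

```python
def explain_linear_prediction(weights, bias, features):
    """Split a linear model's prediction into per-feature contributions.

    For a linear model, prediction = bias + sum(weight * value), so each
    weight * value term is exactly that feature's share of the prediction.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    prediction = bias + sum(contributions.values())
    # Largest effect first, whether it pushes the prediction up or down.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return prediction, ranked


# Hypothetical daily-demand model; weights and inputs are illustrative only.
weights = {"promotion_running": 25.0, "price": -3.0, "temperature_c": 0.5}
features = {"promotion_running": 1, "price": 12, "temperature_c": 20}
prediction, ranked = explain_linear_prediction(weights, 100.0, features)
print(f"predicted demand: {prediction:.0f} units")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.1f}")
```

Showing stakeholders a ranked breakdown like this makes it easier to spot when a model leans on a feature it should not, which supports both transparency and accountability.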

“The ethical deployment of AI not only ensures compliance with legal standards but also enhances public trust and promotes sustainable technology adoption.”

Outcome Summary

In conclusion, optimizing data analysis with AI not only streamlines the process but also empowers users to generate insights more efficiently. As we’ve explored various tools, best practices, and ethical considerations, it’s clear that embracing AI can significantly enhance data workflows while maintaining high standards of quality and integrity. As you step into this AI-driven era, remember that you can achieve powerful results without getting tangled in complicated code.